Initial Design

Team Grassland Birds is working with the Smithsonian Conservation Biology Institute (SCBI) to locate the nests of a rapidly declining population of grassland-nesting birds. The team’s initial technical iteration involved mounting a FLIR Duo camera, which can capture thermal and visual images simultaneously, on a 3DR Solo drone. However, after speaking with Martin Israel of the Remote Sensing Technology Institute, German Aerospace Center (DLR), the team recognized that the superior thermal resolution of the FLIR Vue Pro better suited the project’s goals.

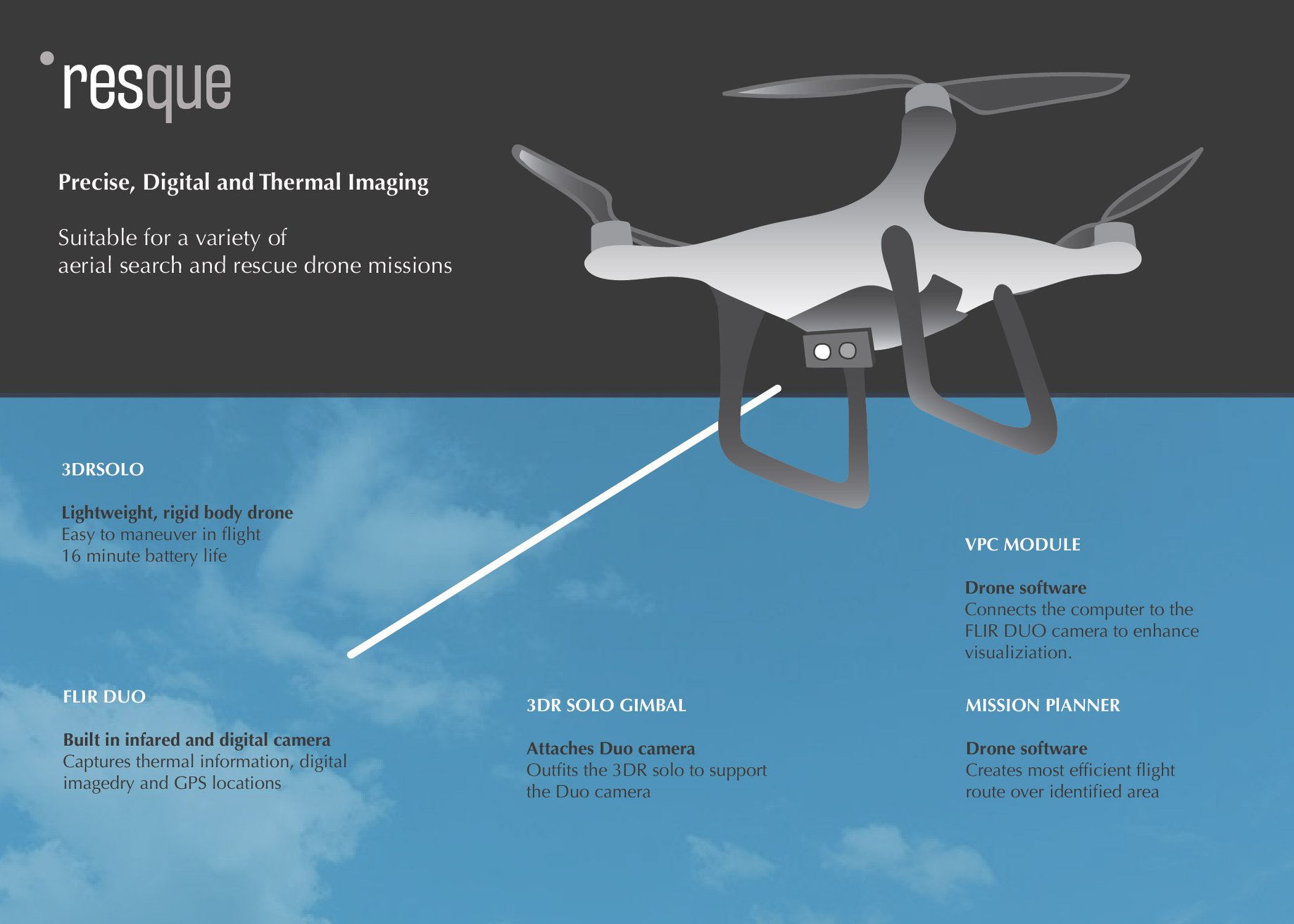

The team used a GoPro Hero 4 in place of the FLIR Duo to capture visual images. Although thermal imaging improved, pairing the FLIR Vue Pro with the GoPro proved problematic: the two cameras could not be integrated into a single mount, so they could not capture images in exact synchronization. Consequently, the team explored using photogrammetry software such as Pix4D to “stitch” the thermal and visual images together into a map for SCBI that shows exactly where bird nests are located in a field. Figure 1 illustrates an initial visualization of the technical bill of materials, including the 3DR Solo drone, the since-replaced FLIR Duo thermal camera, the 3DR Solo gimbal, a VPC module, and Mission Planner software.
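Because the two cameras could not capture images in exact synchronization, one way to line up their photos for overlay or stitching is to match each thermal image with the visual image taken closest in time. The following is a minimal sketch. It assumes each image’s capture time has already been read (for example, from its EXIF metadata) and that any offset between the two camera clocks is known or estimated. The one-second tolerance, the `offset` parameter, and the file names are hypothetical.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def pair_by_timestamp(thermal, visual, tolerance=timedelta(seconds=1),
                      offset=timedelta(0)):
    """Pair each thermal image with the visual image closest in time.

    thermal, visual: lists of (filename, datetime) tuples.
    offset: correction added to thermal timestamps to align the two camera clocks.
    Thermal images with no visual image within `tolerance` are left unpaired.
    """
    visual_sorted = sorted(visual, key=lambda item: item[1])
    times = [t for _, t in visual_sorted]
    pairs = []
    for name, t in thermal:
        t = t + offset
        i = bisect_left(times, t)
        # The nearest visual image is either just before or just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(times[j] - t))
        if abs(times[best] - t) <= tolerance:
            pairs.append((name, visual_sorted[best][0]))
    return pairs


if __name__ == "__main__":
    # Hypothetical filenames and times, for illustration only.
    thermal = [("IR_0001.jpg", datetime(2018, 4, 10, 10, 0, 0))]
    visual = [("GOPR0001.JPG", datetime(2018, 4, 10, 10, 0, 0, 400000))]
    print(pair_by_timestamp(thermal, visual))
```

Sorting the visual list once and bisecting keeps the matching fast even for long flights. The tolerance should reflect how far apart the two cameras’ shots can drift.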

Figure 1. Initial visualization of the technical bill of materials, including the 3DR Solo drone, the FLIR Duo, the 3DR Solo gimbal, a VPC module, and Mission Planner software.

The team’s initial iteration also included a VPC module, software that connects a computer to the FLIR camera, to access the “brain” of the FLIR Vue Pro. The thermal camera applies “tailing filters,” which prevent it from registering temperature differences that fall below a certain threshold, such as 5%. The VPC module provides an interface to the camera’s “brain” through which such filters can be removed. However, the team did not have the opportunity to experiment with the VPC module, because the manufacturer had modified the FLIR Vue Pro to prevent this kind of manipulation.

The team’s first two attempts met with mixed success. During the first round of test flights, the team was unable to capture thermal photographs with the FLIR Vue Pro while it was mounted on the FLIR gimbal, the camera’s stabilization system. The FLIR captured only video. This interfered with plans to use machine learning to “train” software to recognize thermal hotspots representing nests across a large set of images. To work around this, the team placed the GoPro on the 3DR Solo gimbal instead, affixed the FLIR Vue Pro to the belly of the drone, and controlled it manually through a mobile application. The team then contacted the manufacturer directly and learned that the FLIR gimbal is not limited to video: it can also be used to capture photographs.

Experiments and Results

To determine the altitude that gives the best image resolution, the team placed two hand-warmers (emulating the heat signature of a grassland bird nest) in the grass. One rested on top of the grass, while the other was covered by grass. The drone was flown at incremental heights between 9 and 20 feet, and thermal images were captured at each height. Figure 2 shows a thermal image of the two hand-warmers, with a water bottle included for reference.
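One way to reason about the altitude trade-off is ground sample distance (GSD): the width of ground covered by a single pixel. For a camera pointing straight down, GSD grows linearly with altitude. The following sketch computes GSD across the tested range of 9 to 20 feet. The 17 µm pixel pitch and 13 mm focal length are assumed example values, not confirmed specifications of the team’s FLIR Vue Pro; substitute the figures from the camera’s datasheet.

```python
FEET_TO_METERS = 0.3048

# Assumed example sensor values; replace with figures from the camera's datasheet.
PIXEL_PITCH_UM = 17.0   # width of one detector pixel, in micrometres
FOCAL_LENGTH_MM = 13.0  # lens focal length, in millimetres


def ground_sample_distance_cm(altitude_ft, pixel_pitch_um=PIXEL_PITCH_UM,
                              focal_length_mm=FOCAL_LENGTH_MM):
    """Ground distance covered by one pixel (cm) for a camera pointing straight down.

    By similar triangles, GSD = altitude * pixel_pitch / focal_length.
    """
    altitude_m = altitude_ft * FEET_TO_METERS
    gsd_m = altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)
    return gsd_m * 100.0


if __name__ == "__main__":
    for altitude_ft in (9, 12, 15, 20):
        gsd = ground_sample_distance_cm(altitude_ft)
        print(f"{altitude_ft:>2} ft: {gsd:.2f} cm per pixel")
```

A heat source of fixed size covers fewer pixels as GSD grows. This gives a rough sense of how altitude affects how many pixels a hand-warmer occupies in each image.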

Figure 2. Thermal image captured with the FLIR Vue Pro. The white square on the left-hand side is the hand-warmer on top of the grass, while the bright spot closest to the bottom of the frame is the hand-warmer covered by grass.

To quantify which altitude gives the best image resolution, the team used ImageJ, image-analysis software developed at the National Institutes of Health (NIH). ImageJ measured the pixel brightness of each hand-warmer as altitude changed, and the team found that this approach worked well.
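The same kind of measurement can be scripted to run over batches of images. The following sketch computes the mean brightness inside a rectangular selection, which is comparable to the mean gray value ImageJ reports for a selection. It then subtracts a nearby background region, so that hand-warmer brightness is judged relative to the surrounding grass. It assumes each thermal image has already been loaded as a 2D grid of grayscale values. The region-of-interest (ROI) coordinates and function names are hypothetical.

```python
from statistics import mean


def roi_mean(image, x, y, width, height):
    """Mean pixel value inside a rectangular region of interest (ROI).

    image is a list of rows, so the pixel at column x, row y is image[y][x].
    """
    values = [image[row][col]
              for row in range(y, y + height)
              for col in range(x, x + width)]
    return mean(values)


def roi_contrast(image, target_roi, background_roi):
    """Mean brightness of a target ROI minus that of a background ROI.

    Each ROI is an (x, y, width, height) tuple.
    """
    return roi_mean(image, *target_roi) - roi_mean(image, *background_roi)
```

A relative measure is used here because raw brightness values may be rescaled by the camera from frame to frame. Comparing each target against its own background should therefore be more consistent across altitudes.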

Figure 3 graphs how high the drone can fly above the ground while still detecting the hand-warmers. As the graph shows, the hand-warmers are detected more clearly as the drone’s altitude approaches 15 ft.
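Reading the best altitude off a graph like Figure 3 can also be done programmatically. The following is a minimal sketch. It assumes per-altitude brightness measurements for a hand-warmer and its background are available (for example, exported from ImageJ). It ranks altitudes by contrast and breaks ties in favour of the higher altitude. The function names are hypothetical, and no measured values are included.

```python
def contrast_by_altitude(measurements):
    """Map each altitude to target-minus-background brightness.

    measurements: dict of altitude (ft) -> (target_mean, background_mean).
    """
    return {alt: target - background
            for alt, (target, background) in sorted(measurements.items())}


def best_altitude(measurements):
    """Altitude with the highest contrast.

    Ties go to the higher altitude, since each image then covers more ground.
    """
    if not measurements:
        raise ValueError("no measurements supplied")
    contrasts = contrast_by_altitude(measurements)
    return max(contrasts, key=lambda alt: (contrasts[alt], alt))
```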

Final Minimum Viable Product

The team’s final minimum viable product (MVP) is a drone equipped with two specialized cameras (infrared and RGB). Either camera can be designated as primary or secondary, depending on the type of mission. The team can view the primary camera’s feed as the drone flies; imagery from the secondary camera is accessible only after the flight. The team chose two cameras instead of one so that it could overlay the thermal and visual photographs. The latitude and longitude of each nest were geotagged, and the coordinates were pinned on a map of the site. This overlay helps the team correlate details that might otherwise be missed. To date, the team has successfully flown the drone and captured photos autonomously, and has processed the images from both cameras. The team has been using Pix4D, a photogrammetry package, to stitch photos together into 2D and 3D models of the testing locations.
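Pinning nests on a map usually means converting coordinates to decimal degrees and writing them in a standard map format. EXIF GPS tags store latitude and longitude as degrees, minutes, and seconds, with a separate N/S or E/W reference. The following sketch converts those values to signed decimal degrees and builds a GeoJSON FeatureCollection, which many mapping tools can load. The nest dictionary layout (`id`, `lat`, `lon`) is an assumption for illustration, not the team’s actual data format.

```python
import json


def dms_to_decimal(degrees, minutes, seconds, ref):
    """Convert degrees/minutes/seconds (as stored in EXIF GPS tags) to decimal degrees.

    ref is the hemisphere letter: 'N', 'S', 'E', or 'W'.
    """
    value = float(degrees) + float(minutes) / 60.0 + float(seconds) / 3600.0
    # Southern latitudes and western longitudes are negative.
    return -value if ref.upper() in ("S", "W") else value


def nests_to_geojson(nests):
    """Build a GeoJSON FeatureCollection with one Point per nest.

    nests: list of dicts with hypothetical keys 'id', 'lat', 'lon' (decimal degrees).
    GeoJSON lists coordinates as [longitude, latitude].
    """
    return {
        "type": "FeatureCollection",
        "features": [
            {
                "type": "Feature",
                "geometry": {"type": "Point", "coordinates": [n["lon"], n["lat"]]},
                "properties": {"id": n["id"]},
            }
            for n in nests
        ],
    }


if __name__ == "__main__":
    # Arbitrary example coordinates, not a real nest location.
    nest = {"id": "nest-1",
            "lat": dms_to_decimal(38, 53, 24.0, "N"),
            "lon": dms_to_decimal(77, 2, 0.0, "W")}
    print(json.dumps(nests_to_geojson([nest]), indent=2))
```

Note the coordinate order: GeoJSON puts longitude first. Swapping the two is a common source of misplaced pins.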

Future Directions

If the team pursues the project further, it would like to modify the “brain” of the thermal camera to remove the pre-existing filters. The thermal camera registers temperature differences as it captures images, but the filters reduce its sensitivity by lumping together zones of similar temperature. The temperature difference between a grassland bird nest and the surrounding terrain may be very small, so removing these filters should help the camera capture such differences. The team would also like to modify the drone to carry two camera stabilizers, or gimbals. Currently, the thermal camera is mounted on a gimbal, which lets users view its feed in real time. The GoPro is not on a gimbal, so its images can be viewed only during post-processing. If a problem occurs with the GoPro in flight, the team will not know until after the flight.
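The loss of sensitivity described above can be illustrated with a deliberately simplified model. How the camera’s filters actually work is not documented here. This sketch assumes they behave like coarse temperature binning, snapping each reading to a bin of fixed width. It shows how a small nest-to-background difference disappears when the bin is wider than that difference. The bin widths and readings are hypothetical.

```python
import math


def quantize(temperature_c, bin_width_c):
    """Simplified filter model (an assumption): snap a reading down to its bin."""
    return math.floor(temperature_c / bin_width_c) * bin_width_c


def distinguishable(nest_c, background_c, bin_width_c):
    """True if nest and background readings fall into different bins."""
    return quantize(nest_c, bin_width_c) != quantize(background_c, bin_width_c)


if __name__ == "__main__":
    # Hypothetical readings 0.2 degrees C apart, under coarse and fine binning.
    for width in (1.0, 0.25):
        print(width, distinguishable(20.3, 20.1, width))
```

Binning can also split two nearly equal readings that straddle a bin edge. This is therefore only an illustration of lost sensitivity, not a model of the real firmware.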

A significant aspect of this project is geotagging the locations of identified bird nests. If the team can separate the images and post-process them to determine the latitude and longitude of each point, it can manually locate the bird nests on a single map. Alternatively, the team is interested in using machine learning to speed up object identification. By training on a large set of thermal photos of bird nests, the team could “teach” the program to find and identify nests within new photos. This would make the end-to-end process seamless and require minimal human involvement.
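Both routes in this paragraph end with pixel positions that must be converted to coordinates. As a starting point before any machine learning, the following sketch uses plain thresholding with connected-component labelling to find warm regions in a thermal image. This is a swapped-in baseline, not a trained model. The sketch then converts a pixel position to an approximate latitude and longitude. It assumes the camera points straight down, the image is centred on the drone’s GPS position, the ground is flat, the ground sample distance (metres per pixel) is known, and the compass heading of the image’s top edge is known. The threshold, function names, and parameters are hypothetical.

```python
import math
from collections import deque

EARTH_RADIUS_M = 6_371_000.0


def find_hotspots(image, threshold, min_pixels=1):
    """Return centroids (x, y) of 4-connected regions brighter than threshold."""
    if not image:
        return []
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Flood-fill this region, collecting its (x, y) pixel coordinates.
            queue, pixels = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((x, y))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_pixels:
                cx = sum(p[0] for p in pixels) / len(pixels)
                cy = sum(p[1] for p in pixels) / len(pixels)
                centroids.append((cx, cy))
    return centroids


def pixel_to_latlon(px, py, width, height, drone_lat, drone_lon, gsd_m,
                    heading_deg=0.0):
    """Approximate lat/lon of pixel (px, py) in a straight-down image.

    heading_deg is the compass direction the top of the image faces (clockwise
    from north). Uses a small-offset equirectangular approximation.
    """
    # Offset from the image centre in metres: +right, +up (toward the image top).
    dx_right = (px - (width - 1) / 2) * gsd_m
    dy_up = ((height - 1) / 2 - py) * gsd_m
    h = math.radians(heading_deg)
    # Rotate image axes into east/north components.
    east = dx_right * math.cos(h) + dy_up * math.sin(h)
    north = -dx_right * math.sin(h) + dy_up * math.cos(h)
    lat = drone_lat + math.degrees(north / EARTH_RADIUS_M)
    lon = drone_lon + math.degrees(
        east / (EARTH_RADIUS_M * math.cos(math.radians(drone_lat))))
    return lat, lon
```

A trained detector could later replace `find_hotspots` while the pixel-to-coordinate step stays the same. For heights of a few metres, the flat-ground approximation should introduce far less error than consumer GPS does.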